Sharp’s AI Partner – Providing Various Services to Suit Your Personal Lifestyle

January 21, 2025

In my previous post, I introduced our virtual AI trainer “AI Healthcare Trainer.” It provides casual healthcare consultations and exercise advice through conversation with an AI character on the living room TV screen. Now, what if a virtual AI concierge in your TV could also assist with product purchases and more? Don’t you think it could open up new possibilities?

Sharp is developing a solution called AI Partner to realize such ideas on the large TV screen in your living room. In AI Partner, an AI avatar acts as your personal concierge, offering various lifestyle services.

AI Partner is equipped with Sharp’s proprietary edge AI technology CE-LLM (Communication Edge-LLM). It was showcased at our large-scale technology exhibition event, “SHARP Tech-Day’24 Innovation Showcase” (hereinafter referred to as Tech-Day) held in September last year, as well as at the comprehensive exhibition “CEATEC 2024,” which is one of the largest digital innovation exhibitions in Japan. It has garnered attention as a service of the near future.

This time, we interviewed three members of the development team: Nakata, Hayashi, and Sano.

●“CE-LLM” is a registered trademark of Sharp Corporation.

― What is “AI Partner”?

(Nakata) AI Partner is a solution that uses Sharp’s unique edge AI technology CE-LLM to provide a new viewing experience and various kinds of lifestyle support through the TV. In the future, we aim for the AI avatar on the TV screen to act as your personal concierge, living alongside you and offering various services through natural conversation.

― What triggered the development and what was its aim?

(Nakata) We have been developing AQUOS TVs and exploring new value for them. We considered how to use CE-LLM, the edge AI technology that our R&D division is developing and that is being promoted company-wide, to add that value. Devices equipped with CE-LLM can communicate through spoken conversation, which enables a new UX (user experience) based on dialogue rather than a remote control.

― What specific functionalities does it offer?

(Nakata) You can converse freely with the AI avatar. For example, it can chat with you while you watch videos or assist with product purchases. We also envision the AI avatar functioning as a portal for various services. Furthermore, you can create and switch between different AI avatars through conversation.

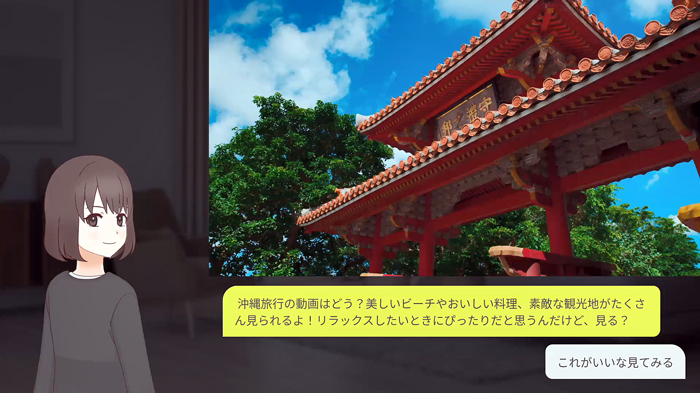

Let me show you a demonstration. We will operate it using gestures and conversation instead of a remote control. When I wave my hand, the AI avatar appears on the TV screen.

“Let’s watch a video together.”

↓

(AI) “Sounds good! Let’s pick a video. How about the Sharp Super Cup soccer match? It looks like an exciting game!”

↓

“Are there other videos?”

↓

(AI) “How about a video from Okinawa? It’s perfect for when you want to relax. Do you want to watch it?”

↓

“I like this one. Let’s watch it.”

↓

(AI) [Watching the video] “I want to relax. I wish I could stay here forever.”

In this way, the AI avatar suggests recommended videos, comments in real time on the video’s content, and occasionally asks questions back. It also changes its expression based on the conversation, showing smiles or frowns. It even responds with a smile to users’ jokes! If there’s no conversation for a while, it assumes you’re focused on the video and disappears, leaving only the video on the screen.

― It also supports users’ shopping, right?

(Nakata) Yes, let me demonstrate.

“I want to buy something.”

↓

(AI) “Great! Let’s go to the shopping screen. You watched a video from Okinawa, right? How about checking out aloe products?”

↓

“I want to buy something related to aloe.”

↓

(AI) “Okinawa’s aloe juice looks delicious! It seems healthy, so let’s give it a try!”

↓

“Okay, add ten of these to the cart.”

↓

(AI) “Alright, I’ve added ten Okinawa aloe juices to the cart. I can’t wait to see them.”

In this way, AI Partner analyzes past conversations and the videos family members have watched to recommend products and support purchases. You can also customize the AI avatar according to your preferences.

― How does AI Partner work?

(Sano) AI Partner uses CE-LLM to interpret the user’s questions and conversation and to generate the response text that the AI avatar presents. The AI avatar’s expressions and gestures are also determined by CE-LLM.

When a quick response is needed, such as backchanneling, we use edge AI; when detailed information is needed, we use cloud AI. We also choose edge AI for conversations involving personal information, such as birthdays.
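As a rough illustration of the routing described above, here is a minimal Python sketch. It is not Sharp’s implementation: the article does not explain how CE-LLM classifies a request, so the keyword lists, the `Route` type, and the `choose_route` function are all hypothetical stand-ins for whatever CE-LLM actually does.

```python
from dataclasses import dataclass

# Hypothetical keyword lists, used only for illustration.
# The article does not say how CE-LLM detects these cases.
PERSONAL_KEYWORDS = ("birthday", "address", "phone number")
BACKCHANNEL_PHRASES = ("uh-huh", "i see", "really", "okay")


@dataclass
class Route:
    target: str  # "edge" or "cloud"
    reason: str


def choose_route(utterance: str) -> Route:
    """Pick edge or cloud processing for one user utterance."""
    text = utterance.lower().strip()

    # Conversations involving personal information are handled on the edge.
    if any(keyword in text for keyword in PERSONAL_KEYWORDS):
        return Route("edge", "personal information")

    # Short acknowledgements need a quick reply, so they also go to the edge.
    if text.rstrip("?!.") in BACKCHANNEL_PHRASES:
        return Route("edge", "quick response")

    # Everything else is assumed to need detailed information from the cloud.
    return Route("cloud", "detailed information")


if __name__ == "__main__":
    for line in ("Really?", "My birthday is in May.", "Tell me about Okinawa."):
        print(line, "->", choose_route(line))
```

The personal-information check runs first in this sketch so that such a request is never sent to the cloud, even when it would also call for a detailed answer.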

(You can refer to a past blog post about CE-LLM. → “Talking freely with home appliances comes true? ~About CE-LLM : Sharp’s people-oriented edge AI technology~”)

― What did you do to ensure conversations flow smoothly?

(Sano) We tuned the system to generate responses appropriate to the conversation scene, such as watching videos or free talk. To make it easier for users to communicate, we also set it up so that the AI avatar initiates conversations.

― What did you work on for the AI avatar’s character and display?

(Hayashi) For the basic display solution, we collaborated with Live2D Co., Ltd., which has the technology to add three-dimensional animation to 2D illustrations. The AI avatar, created as the main character of AI Partner, combines these elements. We named the AI avatar “Hanako Hayakawa,” sharing the same surname as our founder.

(Sano) As this is our first initiative to display an avatar, we made detailed adjustments to ensure natural movements and expressions, as well as synchronization between voice and mouth movements to create the appearance of actual conversation. Furthermore, we ensured smooth scene transitions instead of abrupt changes on the screen, adjusted background brightness, and darkened areas that are not in focus to enhance screen visibility.

― What other challenges did you encounter during development?

(Sano) The gesture that summons the AI avatar is recognized by the camera. However, unlike smartphones, which recognize gestures at close range, AI Partner is installed on a TV and needs to recognize users from farther away, which is challenging because recognition rates drop with distance. We developed new technology to accurately recognize gestures from a distance, thereby improving recognition rates.

Additionally, at exhibition venues such as “Tech-Day,” the space is large, and capturing user voices amidst the noise is vital, which proved challenging. For this demonstration, we adjusted the microphone performance to ensure recognition even in noisy environments.

(Hayashi) In this project, we had to develop systems such as cameras and microphones, create the app for AI Partner, design the mechanism for installation on the TV, and decide on the demonstration content and specifications, all within a short timeframe. The schedule was quite tight leading up to the “Tech-Day” exhibition, but we managed to address everything successfully. Looking back, I would say we worked with a good sense of tension. Thanks to this, many people were able to experience our work at both exhibitions, including “CEATEC 2024,” and we received high praise.

― Finally, can you share your thoughts?

(Sano) We developed this as a concept exhibit, and several challenges remain before it can be put into practical use. We will draw on the feedback and opinions of those who experienced the exhibit to define what an AI Partner that supports customers should be, and we aim to refine the technology toward commercialization.

(Nakata) We want to integrate it into all AQUOS TVs as a home concierge. Personally, I hope to expand the world where I can converse with my favorite characters.

(Hayashi) I believe that when people communicate with friends and family, share their perceptions and thoughts, and deepen their relationships, they tend to feel happier. I hope AI Partner can become a means of such communication. Besides AI Partner, I want to develop services that use AI to make people happy.

― Thank you very much.

I always wanted to have a personal concierge, but I thought it would be difficult to realize. With AI-based services, however, it seems that I could easily have one. In the near future, even someone like me, who doesn’t have that many friends, might have a close companion. This interview made me think that way. I hope AI Partner is put into practical use soon!

(Public Relations H)

<Related Sites>

■SHARP Blog:

Related articles

-

Sharp commemorates its 111th anniversary – “Sharp Technology Day” will be held on November 11, 2023

April 25, 2023

Sharp commemorates its 111th anniversary – “Sharp Technology Day” will be held on November 11, 2023

April 25, 2023

-

Say goodbye to being a quitter. Leave your living room exercises to AI Healthcare Trainer!

December 25, 2024

Say goodbye to being a quitter. Leave your living room exercises to AI Healthcare Trainer!

December 25, 2024

-

Exhibiting at “CEATEC 2024” – Introducing Sharp’s Efforts to Contribute to ESG and Advanced Technologies like AI –

October 16, 2024

Exhibiting at “CEATEC 2024” – Introducing Sharp’s Efforts to Contribute to ESG and Advanced Technologies like AI –

October 16, 2024

-

Exhibiting at CEATEC 2025 – Showcasing Sharp’s Initiatives to Bring Its New Corporate Slogan to Life

October 15, 2025

Exhibiting at CEATEC 2025 – Showcasing Sharp’s Initiatives to Bring Its New Corporate Slogan to Life

October 15, 2025

-

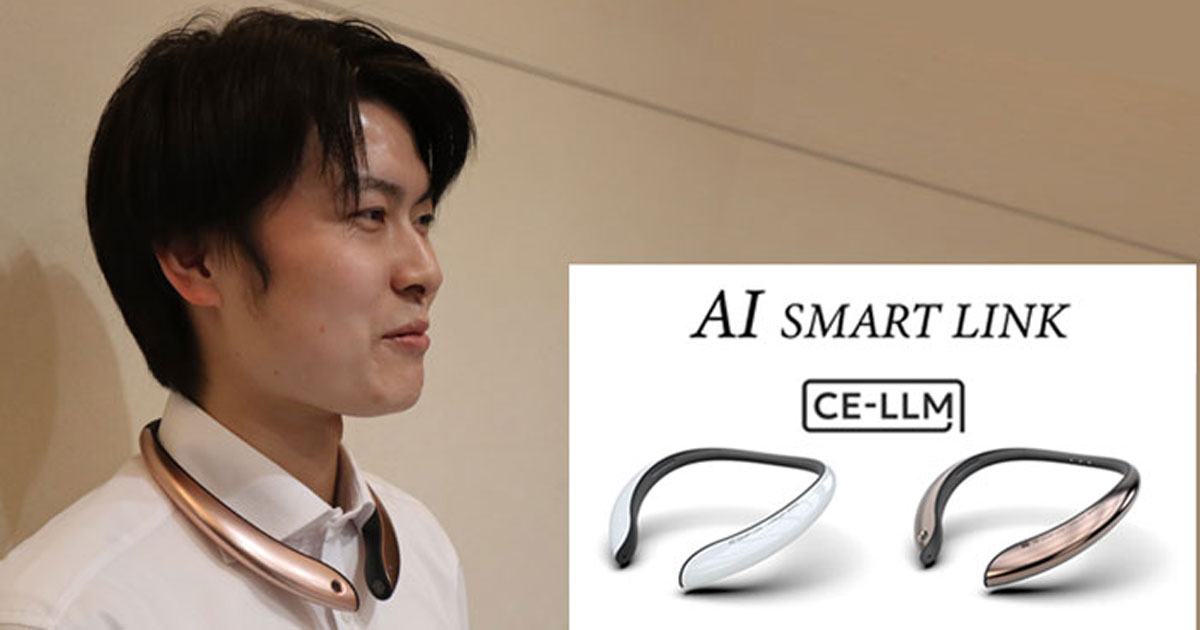

Sharp Develops People-Oriented Wearable Device AI SMART LINK – A Hands-Free Device Enabling Various Communication –

November 29, 2024

Sharp Develops People-Oriented Wearable Device AI SMART LINK – A Hands-Free Device Enabling Various Communication –

November 29, 2024

-

Sharp exhibits at CES 2024, one of the world’s largest technology trade show – Global debut of innovative technologies presented at SHARP Tech-Day –

January 9, 2024

Sharp exhibits at CES 2024, one of the world’s largest technology trade show – Global debut of innovative technologies presented at SHARP Tech-Day –

January 9, 2024

-

“TV in the near Future” Revealed at Hackathon Hosted by SHARP

November 21, 2023

“TV in the near Future” Revealed at Hackathon Hosted by SHARP

November 21, 2023

-

A click feeling as if you were pressing a button even if there is no button! What is the “Click Display”, a device of the near future? ①

April 14, 2023

A click feeling as if you were pressing a button even if there is no button! What is the “Click Display”, a device of the near future? ①

April 14, 2023

-

SHARP Tech-Day is on! Experience the near future with Sharp’s latest technologies

November 10, 2023

SHARP Tech-Day is on! Experience the near future with Sharp’s latest technologies

November 10, 2023

-

In the Past and in the Future, Sharp Continues to Lead the World in Television with AQUOS

November 21, 2024

In the Past and in the Future, Sharp Continues to Lead the World in Television with AQUOS

November 21, 2024