Sharp Develops People-Oriented Wearable Device AI SMART LINK – A Hands-Free Device Enabling Many Kinds of Communication –

November 29, 2024

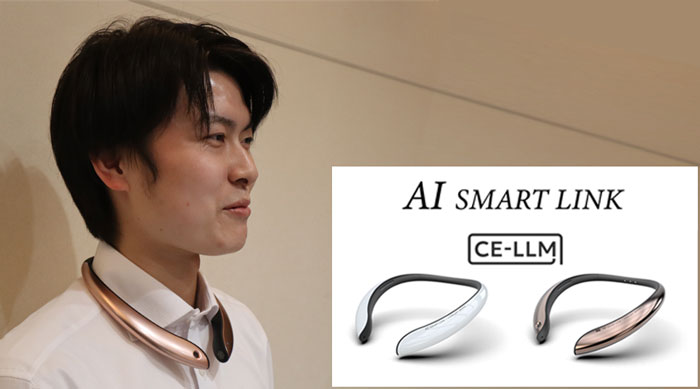

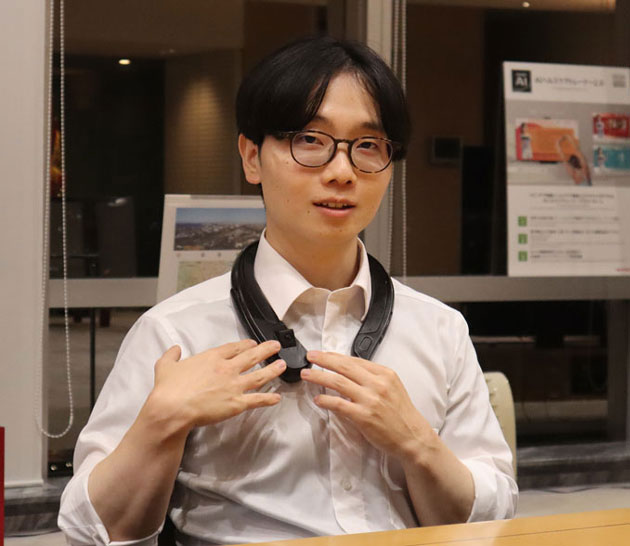

(left: non-camera model, right: camera-equipped model)

Have you ever had trouble abroad because you didn’t understand the local language?

I once missed a concert I had been looking forward to. I was supposed to take the subway to the venue, but I was in a hurry and my hands were full of luggage, so I couldn’t take out my smartphone to check the route. I jumped on a train that seemed right, but unfortunately it was one of the few trains that branched off toward a different destination partway along the line…

In such situations, wouldn’t it be convenient to have a product that tells you, hands-free, what the train’s information board says in the local language?

Sharp is currently developing a product that provides various kinds of hands-free AI support, such as breaking down language barriers and offering cooking guidance. This is the wearable device AI SMART LINK, announced at SHARP Tech-Day’24 “Innovation Showcase,” our large-scale technology exhibition held in September 2024. It is attracting attention, having been ranked No. 1 in the “2025 Hit Predictions 100” by the popular lifestyle magazine Nikkei Trendy*1. (→ Development of the wearable device “AI SMART LINK” Japanese News Release )

Here, we interviewed three developers, Higuchi, Ebihara, and Aota, about the features of AI SMART LINK currently under development and the convenient life it brings.

● Sharp Corporation is applying for a trademark for AI SMART LINK.

*1 The Shoulder-type Wearable Private AI category, which includes Sharp’s AI SMART LINK, ranked first. Sharp shares the award with Fairy Devices Inc.’s corporate device THINKLET and SOURCENEXT Corporation’s conversational AI device BirdieTalk. (→ Shoulder-type Wearable Private AI Category including Sharp’s AI SMART LINK Tops Nikkei Trendy’s “2025 Hit Predictions 100”!)

― What is AI SMART LINK?

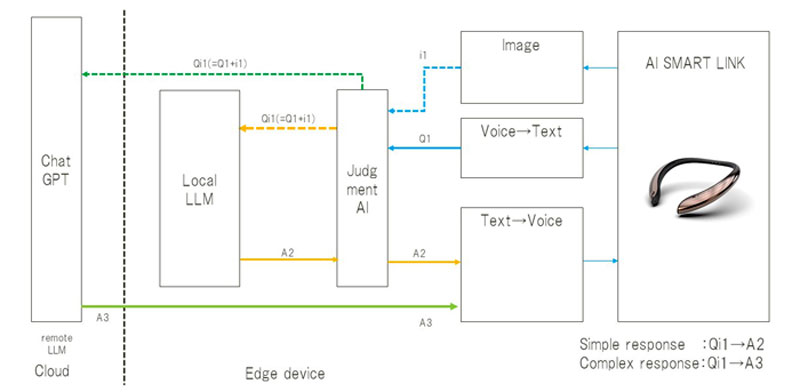

(Higuchi) It is a wearable device that lets you converse naturally with generative AI by voice through a built-in microphone. It is equipped with CE-LLM (Communication Edge-LLM), a proprietary edge AI technology we are developing, and provides hands-free support for users in various situations. In addition to interactive conversation, the camera-equipped model can use its camera to understand the user’s surroundings, enabling more accurate support.

(Ebihara) The edge AI technology CE-LLM instantly decides whether to process the user’s query with the fast-responding edge AI in the device or to send it to a cloud AI such as ChatGPT, which can draw on abundant information. This keeps the conversation smooth and natural.

(For more about CE-LLM, please refer to our previous blog post. → Talking freely with home appliances comes true? ~About CE-LLM : Sharp’s people-oriented edge AI technology~)

● CE-LLM is a registered trademark of Sharp Corporation.

● ChatGPT is a chatbot AI service developed by OpenAI that allows natural conversational interaction.

― What specifically can it do?

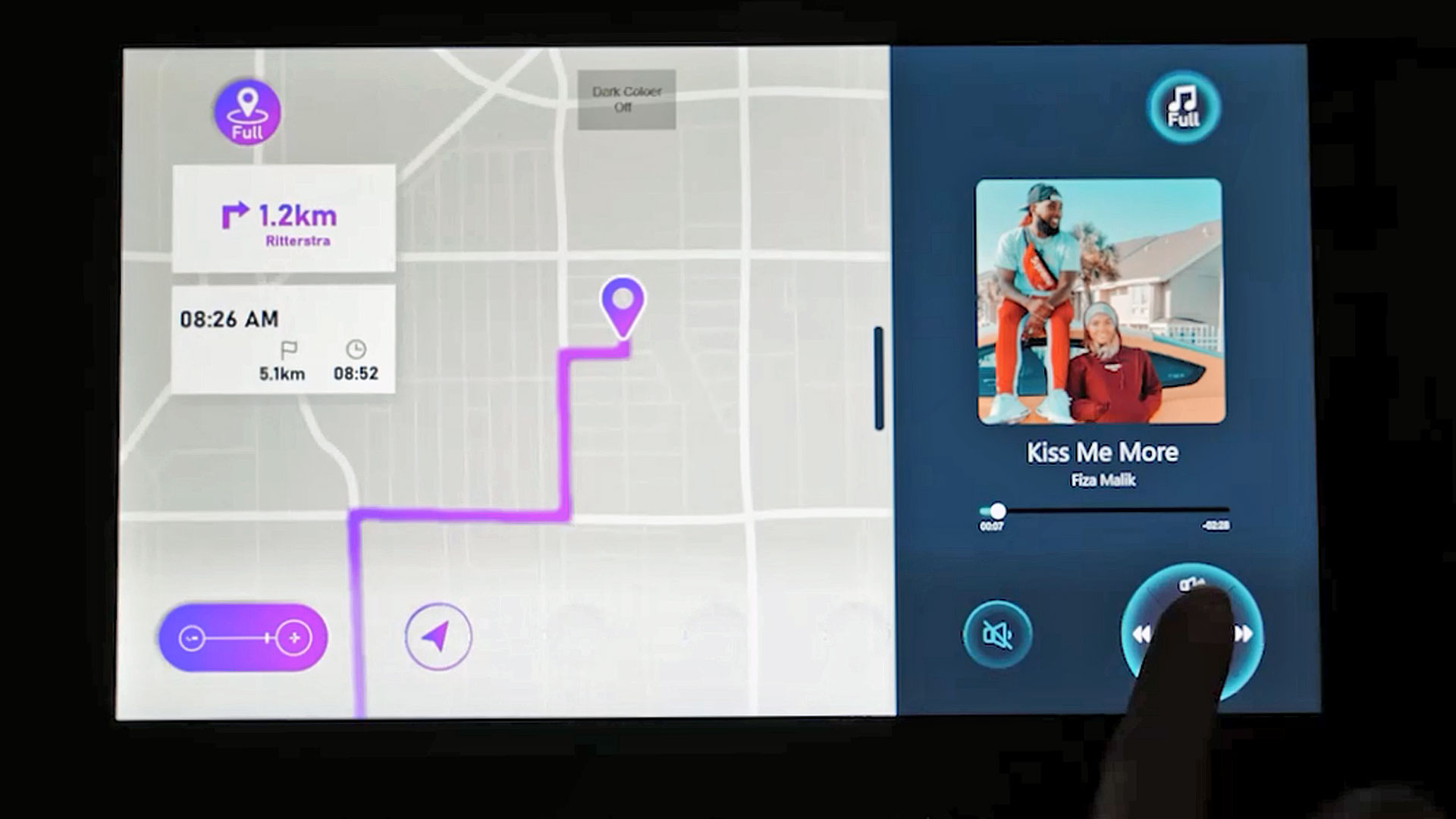

(Aota) For example, when you ride a bicycle wearing AI SMART LINK around your neck, the AI can guide you to your destination by voice. Since you don’t need to look at a screen as you would with a smartphone, it helps you keep your eyes on the road. Its built-in camera can also recognize road signs and billboards, enabling more accurate navigation.

(Ebihara) It is also convenient for travelling. When abroad, you often use your smartphone to translate signs and posters in foreign languages. However, with AI SMART LINK, you can understand the content of signs captured by the camera without using your hands. You don’t even need to take out your smartphone.

Furthermore, for example, when a tourist from abroad visits Japan and sees a “Shachihoko” at a castle, they might not know its name and find it difficult to look it up. However, with AI SMART LINK, they can ask “What is this?” and the device will analyze the image from the camera and provide the information.

― It’s great that it can analyze the image from the camera hands-free. It would have prevented mishaps like the one I experienced abroad.

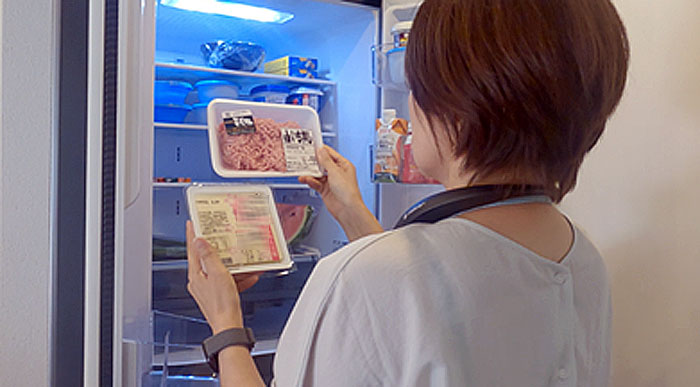

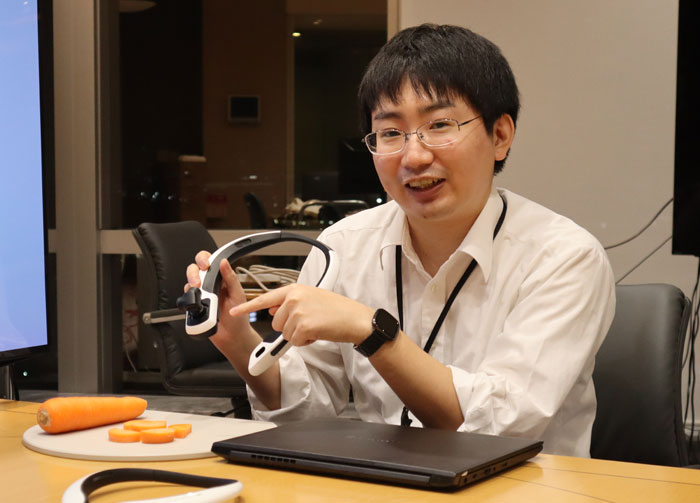

(Higuchi) It can also provide cooking guidance. You can say to the device, “I’ve cut the carrot. How does it look?” and it will check the result with its camera and guide you through the cooking steps in conversation, replying, “Looks good. Let’s move on to the next step.”

Additionally, if you open the refrigerator and say, “I want to cook with the ingredients in the fridge,” it will check the ingredients inside with its camera, suggest menus that can be made with those ingredients, and download recipes to cooking appliances like Healsio once you decide on a menu.

We are developing it with the aim of creating a people-oriented product, so it can also engage in small talk suited to the situation. Even if you ask, “What is the highest mountain in Japan?” while cooking, it will answer. Many other uses are possible beyond the ones we have introduced here.

― What was the motivation for development?

(Ebihara) Our department is advancing AI-related development. At our first large-scale technology exhibition, SHARP Tech-Day, held in November 2023, we unveiled a prototype based on the wearable AI speaker AQUOS Sound Partner (Japanese website) and demonstrated AI-assisted dinner menu suggestions. However, because it was built on the Sound Partner speaker, which has no camera, it could propose menus through conversation but could not understand the situation the user was actually in. To realize Sharp’s AI direction, Act Natural, and provide more convenient solutions, we recognized the need for a product that could judge the situation more accurately using a camera.

Additionally, we built a relationship with Professor Ogasawara of Kyoto University of the Arts, who is also a Fellow at Sakura Internet and an IT evangelist*2 who supports various startups and consults on AI. As our collaboration deepened, the product concept of AI SMART LINK took concrete shape.

*2 A person whose role is to explain advanced and complex IT technologies and trends in an easy-to-understand manner to users.

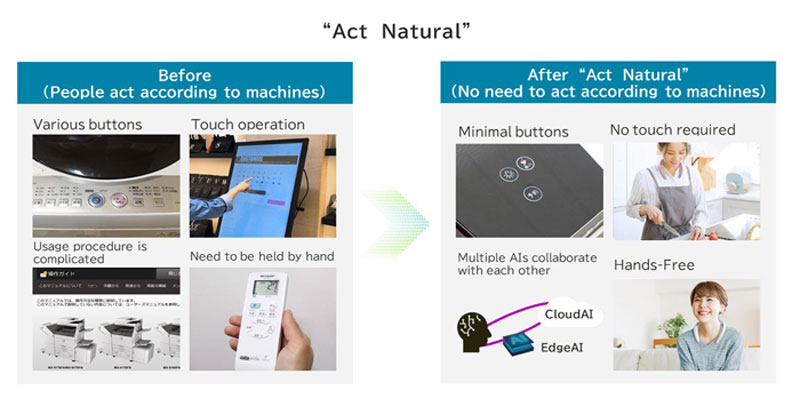

― What is Act Natural?

(Ebihara) Act Natural is the value provided by Sharp’s generative AI, condensed into two words describing how AI and humans interact. Until now, users have been required to understand complex usage procedures and press buttons or touch screens according to the operation methods of appliances or machines. Moving forward, AI will be more integrated with human experiences, providing support through natural actions and conversations. This is the concept we call “Act Natural.”

― So AI SMART LINK embodies Act Natural. What principles and processes are used to provide answers and support?

(Aota) As mentioned earlier, it uses the edge AI technology, CE-LLM. When a user speaks into the built-in microphone, the information is converted to text, and the AI determines whether the user’s question is simple or not. For simple questions, the edge AI in AI SMART LINK responds quickly. For complex questions, it relies on cloud AI, such as ChatGPT, for more detailed answers.
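The edge/cloud routing described above can be illustrated with a small sketch. This is not CE-LLM’s actual logic: Sharp has not published how it judges a question as simple or complex, so the word-count threshold, the hint-word list, and the callables `edge_answer` and `cloud_answer` are all hypothetical stand-ins for the on-device model and a cloud AI such as ChatGPT.

```python
import re
from dataclasses import dataclass
from typing import Callable

# Hypothetical heuristic: CE-LLM's real classifier is not public.
COMPLEX_HINTS = {"why", "explain", "compare", "recipe", "translate"}
MAX_SIMPLE_WORDS = 8


def is_simple(query: str) -> bool:
    """Treat short queries without 'complex' hint words as simple (assumption)."""
    words = re.findall(r"[a-z']+", query.lower())
    if len(words) > MAX_SIMPLE_WORDS:
        return False
    return not any(word in COMPLEX_HINTS for word in words)


@dataclass
class Route:
    target: str  # "edge" or "cloud"
    answer: str


def route_query(query: str,
                edge_answer: Callable[[str], str],
                cloud_answer: Callable[[str], str]) -> Route:
    """Answer simple queries on the device; forward the rest to the cloud."""
    if is_simple(query):
        return Route("edge", edge_answer(query))
    return Route("cloud", cloud_answer(query))


if __name__ == "__main__":
    edge = lambda q: f"[edge] quick reply to: {q}"
    cloud = lambda q: f"[cloud] detailed reply to: {q}"
    print(route_query("What time is it?", edge, cloud).target)  # edge
    print(route_query("Explain how to make curry with carrots and onions", edge, cloud).target)  # cloud
```

In a real device the classification step would itself likely be a model rather than keyword rules; the sketch only shows the shape of the decision: one cheap check up front, then a fast local path or a slower, richer remote path.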

(Ebihara) CE-LLM was also exhibited at SHARP Tech-Day in 2023, but AI SMART LINK adopts an advanced version of CE-LLM that processes not only voice input but also images captured by the camera in parallel, making it multimodal and capable of answering questions using both voice and image information.
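Processing voice and camera input in parallel can be sketched with Python’s `asyncio.gather`, which runs both steps concurrently and then combines their results into a single request. The functions `transcribe` and `describe_image` below are hypothetical placeholders with simulated delays, and the prompt format in `build_prompt` is an assumption; the real CE-LLM pipeline has not been published.

```python
import asyncio
from dataclasses import dataclass


@dataclass
class MultimodalInput:
    transcript: str
    image_description: str


async def transcribe(audio: bytes) -> str:
    """Hypothetical speech-to-text step (placeholder result)."""
    await asyncio.sleep(0.05)  # simulated processing time
    return "What is this?"


async def describe_image(frame: bytes) -> str:
    """Hypothetical image-understanding step (placeholder result)."""
    await asyncio.sleep(0.05)  # simulated processing time
    return "golden fish-shaped ornament on a castle roof"


async def gather_inputs(audio: bytes, frame: bytes) -> MultimodalInput:
    # Run both modalities concurrently instead of one after the other.
    transcript, description = await asyncio.gather(
        transcribe(audio), describe_image(frame)
    )
    return MultimodalInput(transcript, description)


def build_prompt(inp: MultimodalInput) -> str:
    # Combine voice and image information into one request for the language model.
    return f"User asked: {inp.transcript}\nCamera sees: {inp.image_description}"


if __name__ == "__main__":
    inp = asyncio.run(gather_inputs(b"", b""))
    print(build_prompt(inp))
```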

― So CE-LLM is what makes smooth conversation possible.

(Ebihara) Yes. However, when the cloud AI is used to respond, communicating with the cloud inevitably takes time. In the prototype demo at SHARP Tech-Day’24 “Innovation Showcase,” we recreated a scene in which the camera read a sign written in a foreign language and the edge AI identified the language. In this demo, the device said, “This sign is in German, right?” to fill the gap in the conversation until the cloud AI’s answer arrived. This also serves to confirm whether the information is what the user wants to know.
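The “fill the gap” behavior in this demo can be sketched with `asyncio`: the slow cloud request is started first as a background task, the fast edge step produces a short confirming remark while it runs, and the cloud answer is spoken when it arrives. Everything here is hypothetical: `edge_identify_language` uses a toy umlaut check rather than real language identification, `cloud_translate` returns a placeholder, and the delays only simulate latency.

```python
import asyncio
from typing import Callable


async def edge_identify_language(text: str) -> str:
    """Hypothetical fast on-device step (toy heuristic, not real language ID)."""
    await asyncio.sleep(0.01)  # simulated short edge latency
    return "German" if any(ch in text for ch in "äöüß") else "an unfamiliar language"


async def cloud_translate(text: str) -> str:
    """Hypothetical slow cloud step returning a placeholder translation."""
    await asyncio.sleep(0.2)  # simulated network and cloud LLM latency
    return f"Translation of '{text}' (placeholder)"


async def answer_sign(text: str, speak: Callable[[str], None]) -> None:
    # Start the slow cloud request first so it runs while the edge AI replies.
    cloud_task = asyncio.create_task(cloud_translate(text))
    language = await edge_identify_language(text)
    # Filler remark: bridges the wait and lets the user confirm the intent.
    speak(f"This sign is in {language}, right?")
    speak(await cloud_task)


if __name__ == "__main__":
    asyncio.run(answer_sign("Bitte über Gleis 3 umsteigen", print))
```

Starting the cloud task before awaiting the edge step is the key design choice: the filler remark costs no extra total latency because both run at the same time.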

(Higuchi) Additionally, to keep responses smooth, we tuned the prompts so that the AI generates answers that are as concise as possible. We are continuing to take on these kinds of challenges as we move development toward commercialization.

― How was the response to the prototype exhibited at SHARP Tech-Day’24 “Innovation Showcase”?

(Ebihara) The response was beyond expectations. Many people asked, “When will it be released?”

(Higuchi) We demonstrated cooking guidance, and those who participated gave us feedback such as “It would be great if it had such and such features too.” Such feedback was both educational and stimulating.

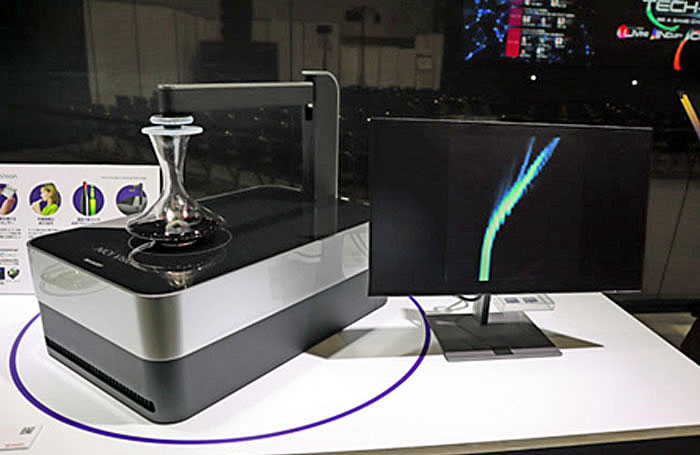

(prototype with a gimbal camera that can be angled freely)

(Aota) Many people visited our exhibit. As a first-year employee, I had never experienced anything like it, and I really enjoyed it. Quite a few attendees told us, “It’s a very Sharp-like product, the kind we haven’t seen in a while, and it has a lot of potential.”

― When will it be commercialized?

(Aota) After verification testing with students from Kyoto University of the Arts (starting in November 2024), we plan to launch products and services based on this concept within fiscal 2025. We appreciate your patience until then! We aim to commercialize both an entry model without a camera and a high-spec model with a camera for more accurate responses.

― Finally, please share your thoughts on this development.

(Higuchi) I want to make conversations with devices more natural. In the future, I hope to develop products like AI robots in science fiction movies that solve problems logically but also have human-like aspects. I want to realize such a world and future at Sharp.

(Ebihara) I hope to develop a personal AI friend for everyone. I want to create a world where you can easily consult and interact with your dedicated AI. I think AI SMART LINK is the first step towards that goal.

(Aota) I hope AI SMART LINK will become popular as a new genre of home appliance. It would be great if AI SMART LINK becomes the standard remote control for home appliances.

― Thank you very much.

Many companies are currently considering various types of AI devices. Among them, shoulder-type AI devices, including AI SMART LINK, were ranked first in the “2025 Hit Predictions 100” by Nikkei Trendy magazine. In the future, hands-free shoulder-type AI devices like AI SMART LINK might become the mainstream of AI devices. Please look forward to the time when they are commercialized!

(Public Relations H)

<Related Sites>

■News Release: Development of the wearable device AI SMART LINK (Japanese)

■SHARP Blog:

SHARP Tech-Day’24 “Innovation Showcase” Is Now Open! Check Sharp’s Proposal for the Vision of the Near Future (held in September this year)

Related articles

-

Talking freely with home appliances comes true? ~About CE-LLM : Sharp’s people-oriented edge AI technology~

March 28, 2024

-

Improves operability with “Click Display” near-future device ②

April 19, 2023

-

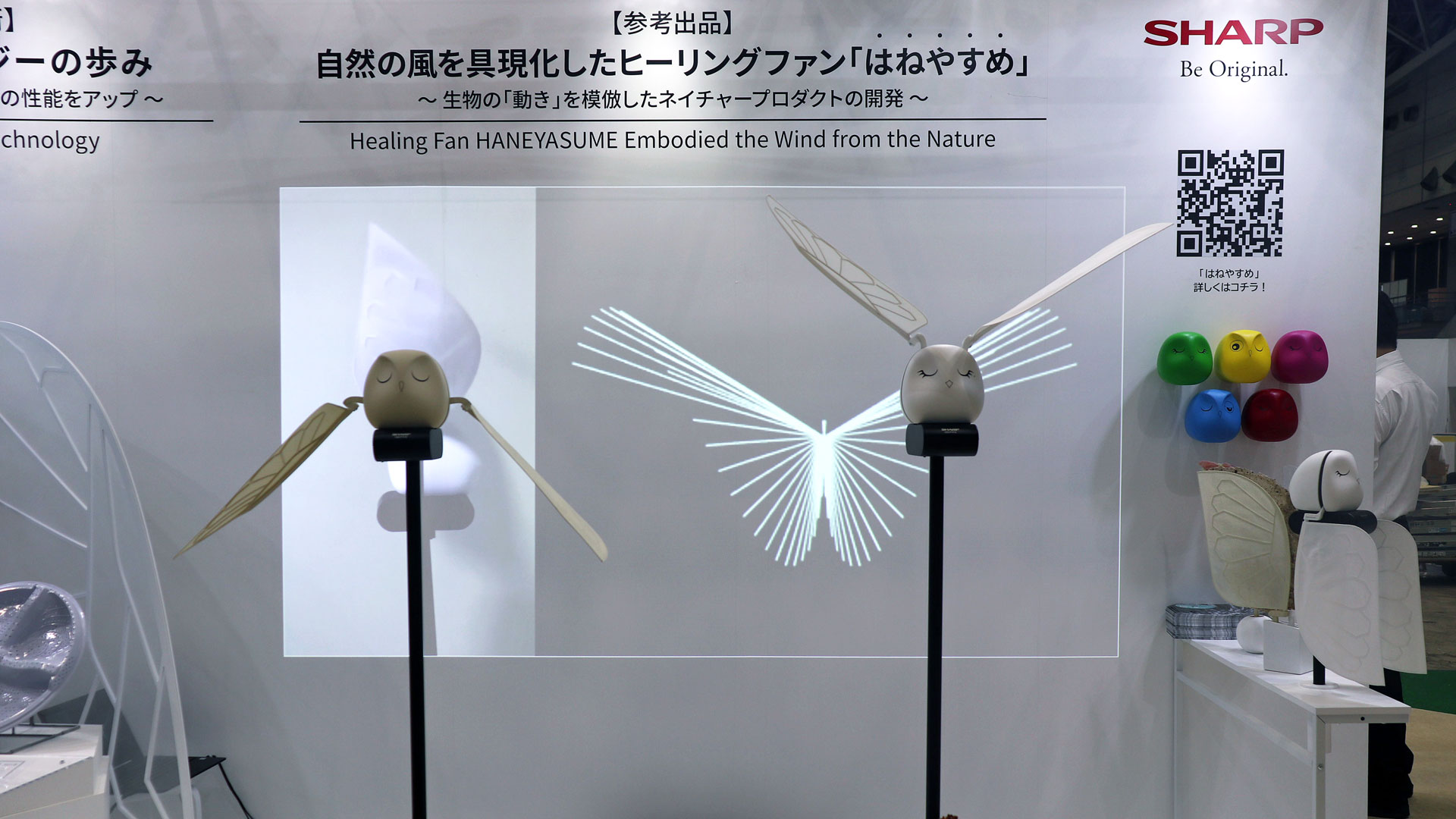

Sharp at CEATEC 2023! – Unique products such as Haneyasume and Click Display on Exhibit

October 19, 2023

-

SHARP Tech-Day is on! Experience the near future with Sharp’s latest technologies

November 10, 2023

-

Solar panels mass-produced at an LCD display factory? – Introducing the high-efficiency, low-cost Indoor Photovoltaic Device LC-LH Contributing to achieving SDGs –

January 12, 2023

-

Detecting Wine Brands by Aromas! AI Olfactory Sensor Development①

January 26, 2024

-

“TV in the near Future” Revealed at Hackathon Hosted by SHARP

November 21, 2023

-

Inspired by Display Structure? AI Olfactory Sensor Development ②

February 6, 2024

-

Exhibiting at CEATEC 2025 – Showcasing Sharp’s Initiatives to Bring Its New Corporate Slogan to Life

October 15, 2025

-

Sharp’s AI Partner – Providing Various Services to Suit Your Personal Lifestyle

January 21, 2025